Technical Excellence Recognized by the Robotics Research Community

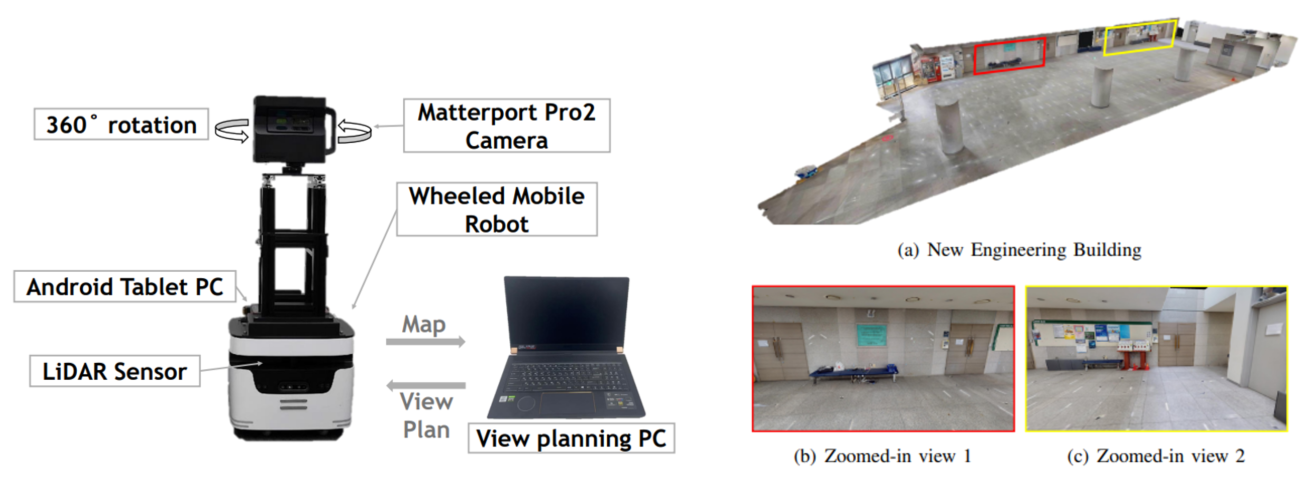

Among the accepted papers, Ewha Womans University’s “Scanning Bot: Efficient Scan Planning using Panoramic Cameras” integrates the Orbbec Astra module (embedded within the Matterport Pro2 system) for high-precision indoor 3D scanning and reconstruction—significantly improving scanning efficiency and texture fidelity.

Shanghai Jiao Tong University’s “Zero-Shot Temporal Interaction Localization for Egocentric Videos” introduces EgoLoc, a novel approach for localizing human-object interaction moments in egocentric video. The Orbbec Femto Bolt camera provided essential 3D spatial data for training, enabling the model’s robust temporal understanding and motion reasoning.

Source: Lee, E., Han, K. M., & Kim, Y. J. (2025). Scanning Bot: Efficient Scan Planning using Panoramic Cameras.

Orbbec’s cameras offer open SDK ecosystems, tunable depth quality, stable multi-camera synchronization, and resilience under challenging conditions such as low light, low texture, or reflective materials—capabilities that enhance both reproducibility and deployment readiness for academic and industrial research.

Embodied AI Defines Core Trends at IROS 2025

IROS 2025 places a strong focus on Embodied AI, emphasizing the deep integration of perception, cognition, and action in robotic systems. Key themes include UAV autonomy, autonomous driving, large language models, dexterous manipulation, and embodied intelligence. Workshops spotlight multimodal perception—combining tactile, visual, and linguistic cues—to enable adaptive, dexterous robotic behavior in complex environments.

Within this context, the two studies employing Orbbec technology illustrate two frontiers of current research:

- Interaction understanding: EgoLoc explicitly incorporates depth and dynamic priors (such as 3D hand velocity and contact/separation cues) into the model’s reasoning process, enabling stable detection of “when to interact” under zero-shot or few-shot conditions.

- Scanning and reconstruction: Scanning Bot applies a systematic design framework of “visibility constraints → viewpoint minimization → path feasibility → total duration optimization,” achieving end-to-end scanning workflows with coverage exceeding 99%.
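To make the "visibility constraints → viewpoint minimization" step more concrete, here is a minimal sketch that treats viewpoint selection as a greedy set-cover problem. This is an illustrative assumption, not the method used in the Scanning Bot paper: the function `greedy_viewpoints`, the candidate names, and the patch indices are all hypothetical, and path feasibility and duration optimization are left out.

```python
from typing import Dict, Hashable, List, Set


def greedy_viewpoints(
    visible: Dict[Hashable, Set[int]],
    targets: Set[int],
    coverage_goal: float = 0.99,
) -> List[Hashable]:
    """Greedily pick viewpoints until the coverage goal is reached.

    `visible` maps each candidate viewpoint to the set of surface patches
    it can see (a stand-in for the visibility constraint). Hypothetical
    helper for illustration only.
    """
    if not targets:
        return []
    covered: Set[int] = set()
    chosen: List[Hashable] = []
    remaining = dict(visible)
    while len(covered) / len(targets) < coverage_goal and remaining:
        # Choose the viewpoint that adds the most not-yet-covered patches.
        best = max(remaining, key=lambda v: len((remaining[v] & targets) - covered))
        gain = (remaining[best] & targets) - covered
        if not gain:
            break  # no remaining candidate improves coverage
        covered |= gain
        chosen.append(best)
        del remaining[best]
    return chosen


# Toy scene: 10 surface patches, 4 candidate viewpoints (made-up data).
candidates = {
    "A": {0, 1, 2, 3},
    "B": {3, 4, 5},
    "C": {5, 6, 7, 8, 9},
    "D": {0, 9},
}
plan = greedy_viewpoints(candidates, targets=set(range(10)))
print(plan)
```

Greedy selection does not guarantee the smallest possible set of viewpoints, but it is a common baseline for coverage problems of this shape.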

These works mirror several emerging consensus trends shaping the evolution of robotics technology at IROS 2025:

- Multi-camera consistency is becoming essential. Stable extrinsics, accurate timestamps, and low-jitter triggering form the backbone of temporal reasoning and panoramic stitching.

- Localized open ecosystems outperform black-box integration. ROS 2, Unity/Unreal support, modular data pipelines, and tunable depth parameters are now key evaluation criteria for vision system architectures.

- Scene robustness and consistency benchmarking are gaining prominence. Stability under low light, low texture, and reflective materials, as well as task-level KPIs like coverage, overlap, and total duration, are increasingly integrated into research metrics and evaluation protocols.
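As a rough illustration of why accurate timestamps matter for multi-camera consistency, the sketch below pairs frames from two cameras by nearest timestamp and drops pairs whose skew exceeds a tolerance. It uses only the Python standard library and does not call any Orbbec SDK; the function `pair_frames`, the microsecond timestamps, and the 1 ms tolerance are assumptions made for the example.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple


def pair_frames(
    ts_a: Sequence[int],
    ts_b: Sequence[int],
    max_skew_us: int,
) -> List[Tuple[int, int]]:
    """Pair each frame in ts_a with its nearest frame in ts_b.

    Both inputs are timestamps in microseconds; ts_b must be sorted.
    Returns (index_in_a, index_in_b) for pairs within max_skew_us.
    Hypothetical helper for illustration only.
    """
    pairs: List[Tuple[int, int]] = []
    for i, t in enumerate(ts_a):
        j = bisect_left(ts_b, t)
        best = None
        # The nearest timestamp is either just before or at the insertion point.
        for k in (j - 1, j):
            if 0 <= k < len(ts_b):
                if best is None or abs(ts_b[k] - t) < abs(ts_b[best] - t):
                    best = k
        if best is not None and abs(ts_b[best] - t) <= max_skew_us:
            pairs.append((i, best))
    return pairs


# Two ~30 fps streams (made-up data); the third frame of camera B arrives late.
cam_a = [0, 33_333, 66_666, 100_000]
cam_b = [150, 33_400, 70_000, 100_020]
matched = pair_frames(cam_a, cam_b, max_skew_us=1_000)
print(matched)
```

In this toy data the late frame is rejected, which is the kind of jitter that hardware-synchronized triggering is meant to avoid.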

Across these trends, 3D vision is evolving from a mere sensing tool into a vital bridge between the physical world and AI decision layers—a cornerstone for embodied intelligence.

Academic roots, industrial reach

Widely regarded as the “Olympics of robotics research,” IROS has, since 1988, driven global advances in intelligent robotics through deep academic rigor and industry collaboration. The 2025 conference gathers more than 7,000 experts, scholars, and professionals from around the world to showcase breakthroughs that define the future of robotics.

As a leading provider of robotics and AI vision technology, Orbbec has built a comprehensive portfolio spanning structured light, iToF, stereo, dToF, LiDAR, and industrial 3D metrology, serving over 3,000 customers worldwide. The company actively supports research and open collaboration, participating in the China 3D Vision Conference (China3DV) in consecutive years and engaging with leading global conferences such as CVPR. By providing foundational 3D vision infrastructure, Orbbec enables researchers and industry partners to focus on what comes next: smarter, more capable robots.

References:

[1] Lee, E., Han, K. M., & Kim, Y. J. (2025). Scanning Bot: Efficient Scan Planning using Panoramic Cameras. IROS 2025.

[2] Zhang, E., Ma, J., Zheng, Y., Zhou, Y., & Wang, H. (2025). Zero-Shot Temporal Interaction Localization for Egocentric Videos. IROS 2025.