Integrated 3D Vision Systems: From Factory Floors to Virtual Worlds

The complete guide to depth sensing technology for robotics, AI vision, and industrial automation

Integrated 3D vision systems—technologies that enable machines to see, understand, and interact with three-dimensional environments—are reshaping industries from manufacturing to augmented reality. No longer confined to research laboratories, these systems now deliver measurable value on factory floors, create immersive digital experiences, and power the next generation of autonomous systems.

Understanding this technology helps developers and business leaders make informed decisions as automation and digitization spread. This guide explains how 3D vision works, surveys its key applications, and outlines how to select the right solution for your needs.

What Are Integrated 3D Vision Systems?

Integrated 3D vision systems combine specialized hardware and software components to capture, process, and interpret three-dimensional spatial data. Unlike traditional 2D cameras that provide flat, two-dimensional images, 3D vision systems deliver rich, volumetric information about objects, environments, and spatial relationships.

At the foundation of every integrated 3D vision system is a combination of specialized sensors and processing modules that work together to create a complete understanding of the physical world.

- Depth Sensors

Form the primary data acquisition layer, utilizing Time-of-Flight (ToF), structured light, or stereo vision technologies to measure distance from the camera to objects in the scene.

- RGB Cameras

Capture traditional color information alongside depth data. This dual-sensor approach enables machines to measure, recognize, and classify objects with contextual color and texture data.

- Infrared Projectors

Emit invisible IR patterns or pulses that interact with the environment, enabling depth measurement even in challenging lighting conditions—critical for industrial settings and outdoor applications.

- Onboard Processing

Computational engines that analyze sensor data in real time. Modern integrated systems process data on the device itself, which lowers latency and speeds up decision-making.

- SDKs & APIs

Robust software development kits provide the essential APIs and libraries that allow developers to quickly integrate sensor data into their applications across multiple platforms.

How 3D Depth Perception Works

Depth can be measured in several ways. The three primary approaches are Time-of-Flight (ToF), structured light, and stereo vision, and each suits a different kind of application.

Time-of-Flight (ToF)

Measures depth by calculating the time it takes for modulated infrared light to travel from the sensor to an object and return. Delivers rapid depth acquisition at 30-60+ fps across entire scenes.

Best for: High-speed applications

Structured Light

Projects known patterns (grids or stripes) onto scenes and analyzes how patterns deform when reflected. Offers lower cost, simpler structure, and excellent precision for detailed measurements.

Best for: Quality inspection

Stereo Vision

Mimics human binocular vision with two cameras positioned apart, calculating depth through disparity analysis. Adapts to diverse scenarios with active or passive modes.

Best for: Versatile environments
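The disparity analysis mentioned under Stereo Vision can be sketched with the standard rectified-stereo relation Z = f · B / d. This is a minimal illustration, not any vendor's implementation; the focal length, baseline, and function names below are hypothetical values chosen for the example.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Return depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means the point is at infinity or was not matched.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_step_per_pixel(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """First-order depth change caused by a one-pixel disparity error."""
    return depth_m ** 2 / (focal_px * baseline_m)


if __name__ == "__main__":
    # Hypothetical camera: 640 px focal length, 5 cm baseline.
    focal_px, baseline_m = 640.0, 0.05
    for disparity_px in (64.0, 16.0, 4.0):
        z = depth_from_disparity(focal_px, baseline_m, disparity_px)
        step = depth_step_per_pixel(focal_px, baseline_m, z)
        print(f"disparity {disparity_px:5.1f} px -> depth {z:.2f} m, "
              f"~{step:.3f} m per pixel of matching error")
```

Because the error term grows with the square of depth, a wider baseline or longer focal length is the usual way to extend the useful range of a stereo camera.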

Stereo Vision: Versatility for Any Environment

Stereo vision systems offer exceptional flexibility by adapting to diverse application scenarios. They can operate passively when ambient illumination is sufficient, switch to active mode with IR projection in low-light environments, utilize IR camera pairs for indoor applications requiring consistent performance, or employ color cameras for outdoor deployments where natural lighting is abundant.

Orbbec Gemini 330 Series

Featuring the advanced MX6800 depth engine ASIC, the Gemini 330 series integrates active and passive stereo vision to work reliably both indoors and outdoors, unaffected by sunlight. It is available in multiple configurations, including the Gemini 335, Gemini 335L, and Gemini 335Le with Ethernet connectivity.

Time-of-Flight: Speed and Precision

ToF sensors perform best in situations that require rapid depth acquisition across entire scenes. The sensor emits modulated infrared light, which reflects off objects and returns to the sensor. The system measures the phase shift or time delay of the returning light and converts it to distance for each pixel in parallel, without the correspondence search that stereo matching requires.
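The two measurements named above, time delay and phase shift, map to distance through textbook relations. The sketch below shows both, plus the range at which a phase measurement wraps around. The 100 MHz modulation frequency is an assumed example value, not a specification of any sensor in this guide.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # metres per second, exact by definition


def distance_from_round_trip(delay_s: float) -> float:
    """Direct ToF: the light covers the distance twice, so halve the path."""
    return SPEED_OF_LIGHT_M_S * delay_s / 2.0


def distance_from_phase(phase_rad: float, modulation_hz: float) -> float:
    """Indirect ToF: distance from the phase shift of a modulated signal."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz: float) -> float:
    """Distance at which the phase reaches 2*pi and starts to alias."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_hz)


if __name__ == "__main__":
    modulation_hz = 100e6  # assumed example frequency
    print(f"10 ns round trip   : {distance_from_round_trip(10e-9):.3f} m")
    print(f"pi/2 phase shift   : {distance_from_phase(math.pi / 2, modulation_hz):.3f} m")
    print(f"unambiguous range  : {unambiguous_range(modulation_hz):.3f} m")
```

A higher modulation frequency improves depth resolution but shortens the unambiguous range, which is one reason indirect ToF cameras often combine several frequencies.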

Orbbec Femto Bolt & Femto Mega

Built with Microsoft’s industry-proven iToF technology, the Femto Bolt offers a 1-megapixel depth sensor with 120° FOV, 4K RGB with HDR, and a depth range from 0.25m to 5.46m. The Femto Mega adds a built-in NVIDIA Jetson Nano for onboard processing.

Structured Light: Precision at Lower Cost

While structured light systems typically operate at lower frame rates than ToF and can be affected by ambient light (especially sunlight), they offer significant advantages: lower cost, simpler structure, smaller form factor, and reduced power consumption—all while maintaining excellent accuracy for tasks like quality inspection and dimensioning.

Orbbec Astra 2

The Astra 2 provides high-quality depth data from 0.6m to 8m at up to 1600×1200 resolution and 30fps. With precision of ≤0.16% at 1m, it is well suited to body scanning, quality control, and rehabilitation applications.

Turning Spatial Data Into Actionable Insight

Raw depth data consists of millions of 3D points known as a point cloud. To gather actionable intelligence from the point cloud, sophisticated processing transforms this data through multiple stages.
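As a rough illustration of where a point cloud comes from, the sketch below back-projects a depth image into 3D points with the pinhole camera model. It assumes depth values in millimetres with zero meaning "no reading"; the intrinsics `fx`, `fy`, `cx`, `cy` and the tiny 2×2 image are hypothetical, and a real SDK would normally supply calibrated values.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_to_points(
    depth_mm: Sequence[Sequence[float]],
    fx: float, fy: float, cx: float, cy: float,
) -> List[Point]:
    """Back-project a depth image (row-major, millimetres) to XYZ in metres."""
    points: List[Point] = []
    for v, row in enumerate(depth_mm):        # v: pixel row
        for u, d in enumerate(row):           # u: pixel column
            if d <= 0:
                continue                      # assumption: 0 marks an invalid pixel
            z = d / 1000.0                    # millimetres to metres
            x = (u - cx) * z / fx             # pinhole model, horizontal axis
            y = (v - cy) * z / fy             # pinhole model, vertical axis
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Hypothetical 2x2 depth image and intrinsics.
    depth = [[0, 1000], [2000, 0]]
    for point in depth_to_points(depth, fx=500.0, fy=500.0, cx=0.5, cy=0.5):
        print(point)
```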

3D spatial mapping organizes raw data into coherent spatial structures, creating mesh representations that approximate object geometry. 3D model mapping then matches observed spatial data against known object models or learns new models from the data. Finally, semantic labeling assigns meaning to spatial regions—identifying each point as part of a workpiece, tool, human hand, or background clutter.
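One common first step when organizing raw points into a spatial structure is voxel-grid downsampling, which replaces all points in each small cube with their centroid. This is offered only as an illustrative preprocessing step under that assumption; the source does not say which algorithms a given system uses, and the function name and voxel size here are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]


def voxel_downsample(points: Iterable[Point], voxel_size_m: float) -> List[Point]:
    """Return one centroid per occupied voxel, ordered by voxel index."""
    if voxel_size_m <= 0:
        raise ValueError("voxel size must be positive")
    buckets: Dict[VoxelKey, List[Point]] = defaultdict(list)
    for x, y, z in points:
        # Integer voxel index along each axis (floor handles negatives).
        key = (
            math.floor(x / voxel_size_m),
            math.floor(y / voxel_size_m),
            math.floor(z / voxel_size_m),
        )
        buckets[key].append((x, y, z))
    centroids: List[Point] = []
    for key in sorted(buckets):
        members = buckets[key]
        n = len(members)
        centroids.append((
            sum(p[0] for p in members) / n,
            sum(p[1] for p in members) / n,
            sum(p[2] for p in members) / n,
        ))
    return centroids


if __name__ == "__main__":
    cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.5, 0.0, 0.0)]
    print(voxel_downsample(cloud, voxel_size_m=1.0))
```

Downsampling of this kind trades detail for speed, so later stages such as model matching and semantic labeling work on a regular, lighter representation.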

With semantic understanding, systems can transform raw geometry into actionable insights. Machines make intelligent decisions about how to interact with their environment based on complete spatial awareness.

Applications of Integrated 3D Vision Systems

From the structured environment of manufacturing facilities to the dynamic, unstructured spaces of augmented reality and autonomous robotics, 3D vision is delivering measurable value across industries.

1. Industrial Automation Applications

On the factory floor, 3D vision applications solve problems once impossible with traditional 2D systems. Tasks that required manual intervention can now be handled by 3D vision-powered machines, making operations more efficient and companies more profitable. With better quality inspection, scrap rates fall and businesses gain a competitive advantage.

Material Handling

Robotic bin picking and palletizing/depalletizing rely on 3D vision to handle objects of varying shapes and sizes, calculating optimal grasping points with high success rates.

Precise Dimensioning

3D vision measures package dimensions and volume with accuracy that rivals manual measurement, enabling dynamic pricing and better shipping method selection. Weight cannot be derived from depth data and must come from a separate scale.
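A minimal sketch of dimensioning from a point cloud follows. It assumes the points belong to a single package and have already been expressed in a frame aligned with the package edges, which a real system would have to establish first (for example by segmenting the package and fitting an oriented box). The function names are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def box_dimensions(points: Sequence[Point]) -> Tuple[float, float, float]:
    """Axis-aligned extent of the points along x, y, and z, in metres."""
    if not points:
        raise ValueError("need at least one point")
    xs, ys, zs = zip(*points)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))


def box_volume_m3(dimensions: Tuple[float, float, float]) -> float:
    """Volume of the bounding box in cubic metres."""
    length, width, height = dimensions
    return length * width * height


if __name__ == "__main__":
    # Hypothetical points sampled from a 0.4 m x 0.3 m x 0.2 m package.
    package = [(0.0, 0.0, 0.0), (0.4, 0.0, 0.0), (0.0, 0.3, 0.2), (0.4, 0.3, 0.2)]
    dims = box_dimensions(package)
    print("dimensions (m):", dims)
    print("volume (m^3):", round(box_volume_m3(dims), 6))
```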

Quality Inspection

Integrated systems capture complete geometric information to detect surface irregularities, dimensional variations, and assembly issues—catching defects invisible to the human eye.

Worker Safety

Zone monitoring systems create virtual safety boundaries around dangerous machinery, triggering immediate alerts and automatically halting equipment when workers enter restricted areas.
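The virtual safety boundary described above can be sketched as a box test on incoming 3D points. This is an illustration of the idea only, not a certified safety function: the `SafetyZone` class, the `min_points` noise threshold, and the coordinates are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class SafetyZone:
    """Axis-aligned restricted volume in the sensor's coordinate frame (metres)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z_min: float
    z_max: float

    def contains(self, point: Point) -> bool:
        x, y, z = point
        return (self.x_min <= x <= self.x_max
                and self.y_min <= y <= self.y_max
                and self.z_min <= z <= self.z_max)


def should_halt(points: Iterable[Point], zone: SafetyZone, min_points: int = 5) -> bool:
    """Signal a halt when enough points fall inside the zone.

    Requiring several points (an assumed threshold) avoids reacting to
    isolated depth noise.
    """
    inside = sum(1 for p in points if zone.contains(p))
    return inside >= min_points


if __name__ == "__main__":
    zone = SafetyZone(0.0, 1.0, 0.0, 1.0, 0.5, 2.0)
    frame = [(0.5, 0.5, 1.0)] * 6 + [(3.0, 3.0, 3.0)] * 100
    print("halt equipment:", should_halt(frame, zone))
```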

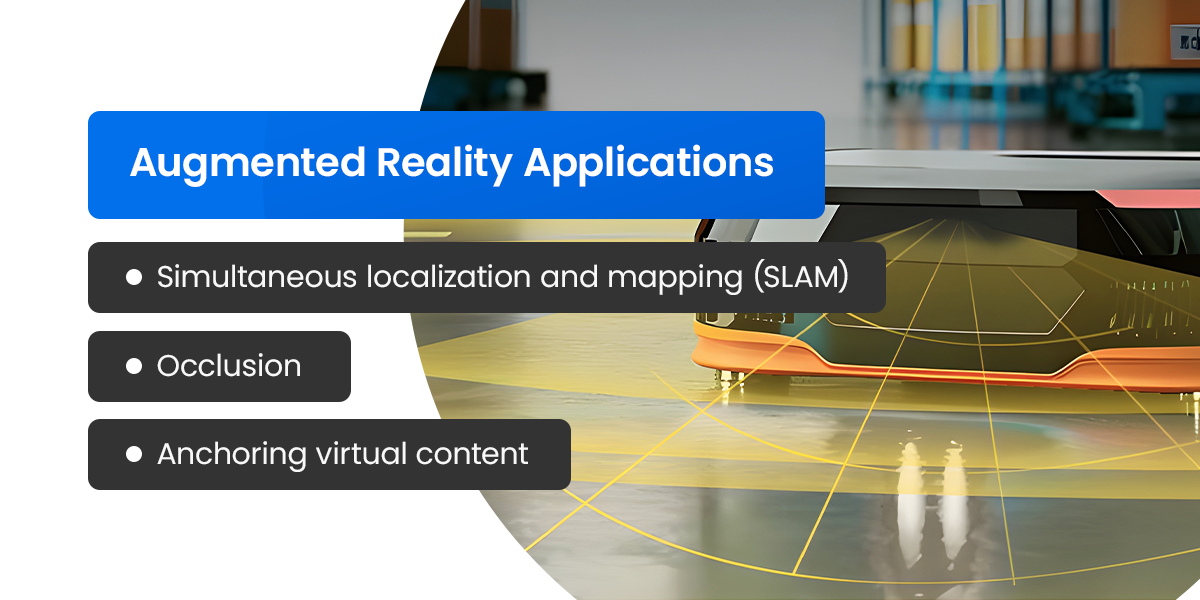

2. Augmented Reality Applications

Augmented reality delivers immersive digital experiences through high-performance 3D vision capabilities. The technology works through three key mechanisms:

- SLAM (Simultaneous Localization and Mapping)

Enables immersive AR experiences by identifying visual features, tracking their movement, calculating the device’s position and orientation, and building a sparse 3D map of the space.

- Occlusion

Renders virtual objects so that nearer physical objects correctly hide them. With accurate depth, virtual furniture sits naturally on real floors, and digital annotations align precisely with physical objects.

- Spatial Anchoring

Virtual objects remain fixed relative to specific physical locations, maintaining stability as users move and change viewing angles.
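Of the three mechanisms above, occlusion is the simplest to sketch: for each pixel, the virtual object is drawn only when it is closer to the camera than the real surface measured by the depth sensor. The example below works on a single row of pixels, uses `None` for "no virtual content" and a depth of zero for "no reading"; those conventions and the function name are assumptions for illustration.

```python
from typing import List, Optional, Sequence


def composite_row(
    camera_px: Sequence[str],
    camera_depth_m: Sequence[float],
    virtual_px: Sequence[Optional[str]],
    virtual_depth_m: Sequence[float],
) -> List[str]:
    """Per-pixel depth test between the real scene and a virtual layer."""
    output: List[str] = []
    for cam, cam_d, virt, virt_d in zip(
        camera_px, camera_depth_m, virtual_px, virtual_depth_m
    ):
        real_unknown = cam_d <= 0  # assumption: 0 means the sensor had no reading
        if virt is not None and (real_unknown or virt_d < cam_d):
            output.append(virt)    # virtual object is in front: draw it
        else:
            output.append(cam)     # real surface is in front: it occludes
    return output


if __name__ == "__main__":
    # "R" marks a real pixel, "V" a virtual one; depths are hypothetical.
    print(composite_row(
        ["R", "R", "R"], [1.0, 3.0, 0.0],
        ["V", "V", None], [2.0, 2.0, 0.0],
    ))
```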

AR applications extend to remote assistance, where technicians receive real-time guidance from experts anywhere in the world, and virtual product try-ons that let customers visualize furniture in their actual living spaces—reducing return rates and improving customer satisfaction.

Orbbec Gemini 2

The compact Gemini 2 provides a wide 91° horizontal field of view and high-quality depth data from 0.15m to 10m, making it well suited to AR, SLAM, and body scanning applications. It uses USB 3.0 Type-C for power and data and includes IMU support.

3. Autonomous Robotics Applications

3D vision technology powers autonomous robots across diverse applications. Navigation systems use depth information to create real-time environmental maps, identify obstacles, and calculate safe paths. As robots navigate, their vision systems continually update understanding of surroundings, detecting new obstacles and identifying efficient routes.

Precision Docking

Robots approach charging stations or loading docks and align themselves within centimeters for successful connection by detecting docking targets and calculating position and orientation.

Inventory Scanning

Drones equipped with 3D vision autonomously navigate warehouse aisles, scanning product locations and quantities without human assistance.

Delivery Robots

For robots navigating pathways and sidewalks alongside pedestrians and vehicles, 3D vision enables real-time obstacle detection, pedestrian movement prediction, and safe navigation.

The most robust autonomous systems employ sensor fusion, combining 3D vision with LiDAR for long-range depth information, inertial measurement units for motion and orientation estimates, and GPS for global positioning in large-scale outdoor operations.
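A very small example of the fusion idea is inverse-variance weighting, where each sensor's estimate of the same quantity is weighted by how much it is trusted. Production systems typically use richer estimators such as Kalman filters; this sketch, with hypothetical readings and variances, only shows why combining sensors reduces uncertainty.

```python
from typing import Sequence, Tuple


def fuse_estimates(estimates: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (value, variance) pairs into one (value, variance) estimate."""
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(variance <= 0 for _, variance in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    fused_variance = 1.0 / total_weight  # never larger than the smallest input variance
    return fused_value, fused_variance


if __name__ == "__main__":
    # Hypothetical distance to an obstacle: depth camera vs. LiDAR, in metres.
    depth_camera = (10.0, 0.04)   # noisier at long range
    lidar = (10.3, 0.01)
    value, variance = fuse_estimates([depth_camera, lidar])
    print(f"fused distance: {value:.2f} m, variance: {variance:.4f}")
```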

Choose Your 3D Vision Solution

Selecting the right 3D vision solution requires evaluating hardware criteria, environmental constraints, and integration needs.

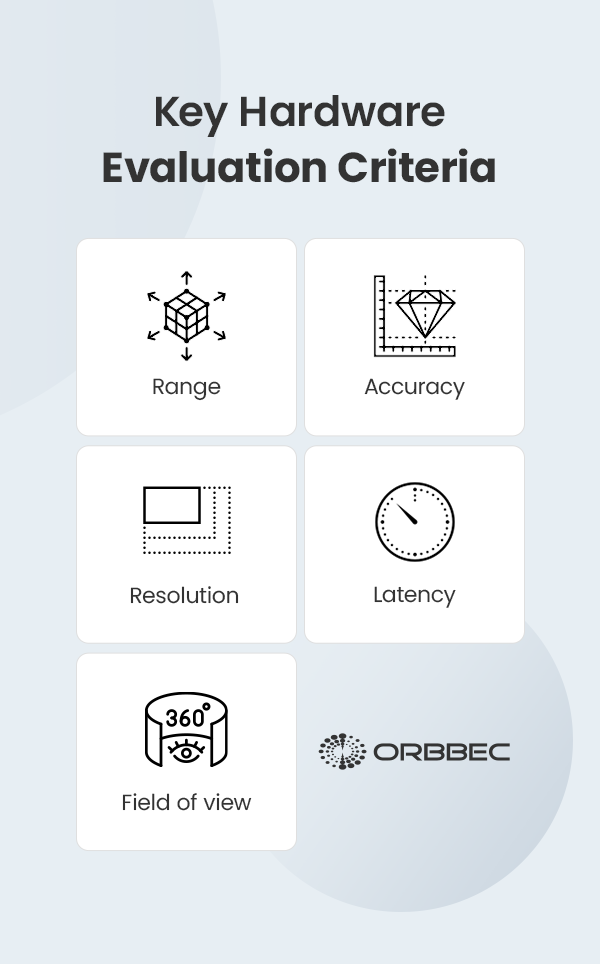

Key Hardware Evaluation Criteria

- Range

The distance over which the system accurately measures depth. Warehouse scanning calls for several meters of range, while close-proximity bin picking depends on a short minimum working distance.

- Accuracy

How close measurements are to the true value. Essential for quality inspection and dimensioning tasks.

- Resolution

The density of depth data captured. Affects the system’s ability to detect fine details and small objects.

- Latency

The delay between capturing a scene and delivering usable depth data. Crucial for robotic control and safety systems.

- Field of View

The angular extent of the scene a sensor can see. Together with working distance, it sets the coverage area and determines whether multiple sensors are necessary for your application.
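To make the field-of-view criterion concrete, the sketch below estimates how wide an area one sensor covers at a given distance and how many sensors a scene might need. It uses simple pinhole geometry and a deliberately conservative overlap allowance; the 91° value matches the Gemini 2 figure quoted earlier, while the distances, overlap fraction, and function names are assumptions.

```python
import math


def coverage_width_m(hfov_deg: float, distance_m: float) -> float:
    """Horizontal width seen at a given distance: 2 * d * tan(HFOV / 2)."""
    return 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)


def sensors_needed(
    scene_width_m: float,
    hfov_deg: float,
    distance_m: float,
    overlap_fraction: float = 0.1,
) -> int:
    """Conservative sensor count, discounting each view by an overlap margin."""
    if not 0.0 <= overlap_fraction < 1.0:
        raise ValueError("overlap_fraction must be in [0, 1)")
    effective_width = coverage_width_m(hfov_deg, distance_m) * (1.0 - overlap_fraction)
    return max(1, math.ceil(scene_width_m / effective_width))


if __name__ == "__main__":
    hfov_deg = 91.0  # horizontal FOV quoted for the Gemini 2 above
    print(f"width covered at 2 m: {coverage_width_m(hfov_deg, 2.0):.2f} m")
    print("sensors for a 10 m wide area at 2 m:", sensors_needed(10.0, hfov_deg, 2.0))
```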

Working Environment Considerations

Consider real-world challenges when selecting your solution: reflective or dark surfaces may require supplementary lighting; infrared from sunlight interferes with ToF and structured light systems, so outdoor deployments require sensors rated for outdoor use; and thermal stability ensures consistent performance across varying temperatures in industrial environments.

Software and Hardware Integration

Orbbec’s mature and comprehensive SDKs—paired with an extensive suite of APIs and robust wrappers across multiple platforms and programming languages—significantly streamline system integration. This cross-platform compatibility allows teams to work in their preferred development environments without sacrificing functionality or performance.

Compared with conventional cameras that transmit raw data to a host for processing, Orbbec’s onboard edge computing shifts much of the perception workload onto the device itself. This architectural approach minimizes latency in the perception layer, reduces the decision system’s processing burden, and delivers faster, more efficient end-to-end performance—enabling real-time responsiveness critical for robotics and industrial automation applications.

Achieve Your 3D Vision Goals With Orbbec

Whether you’re implementing bin-picking systems, building immersive AR experiences, or deploying autonomous robots, our experts can help you select the ideal camera for your specific goals.