3D vision is revolutionizing industries and allowing machines to see the world with depth at unprecedented accuracy. For anyone using or planning to use this technology, it’s important to understand each type of camera implementing these technologies and the key performance metrics that define them. Comparing different 3D vision technologies and how they work in the real world can help individuals choose the best one for their application.

This guide will help developers make informed decisions to optimize their 3D vision solutions, whether they’re seasoned engineers or new to the field.

What Are 3D Vision Technologies?

3D vision technologies — encompassing stereo vision, structured light, and time-of-flight (ToF) methods — capture and represent three-dimensional information. Unlike 2D imaging technologies that cannot measure physical dimensions (e.g., size, volume, distance), 3D vision technologies directly capture these geometric properties and therefore significantly enhance environmental perception.

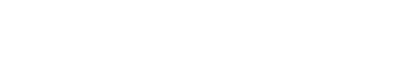

- Stereo vision: One of the core principles of stereo vision is mimicking human sight. Stereo vision uses multiple cameras (usually two) to calculate depth by measuring pixel shifts (disparity) of the same object in different views.

- Structured light: Structured light actively projects a specifically designed pattern onto the scene. The camera captures how surface geometry distorts the pattern, and the system calculates depth from that deformation.

- Time-of-flight (ToF): ToF measures the time it takes for a light signal to travel from the sensor to the object and return, which helps calculate the depth.
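As a rough illustration of the stereo and ToF principles above, the sketch below applies two textbook relations: depth from disparity for a rectified stereo pair (Z = f · B / d) and depth from round-trip time (d = c · t / 2). The function names and sample numbers are hypothetical and are not taken from any particular camera.

```python
# Illustrative sketch: function names and sample values are assumptions.
SPEED_OF_LIGHT_M_S = 299_792_458.0  # metres per second


def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def tof_depth_m(round_trip_s: float) -> float:
    """Depth from round-trip time; the light covers the distance twice."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# A 15-pixel disparity with a 600-pixel focal length and a 5 cm baseline.
print(stereo_depth_m(focal_length_px=600.0, baseline_m=0.05, disparity_px=15.0))
# A 10-nanosecond round trip.
print(tof_depth_m(round_trip_s=10e-9))
```

A larger disparity means a closer object, which is why stereo depth resolution degrades with distance.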

The depth data captured from the 3D sensor enables the system to have advanced perception capabilities beyond 2D imaging, supporting tasks such as spatial mapping and object manipulation. The 3D data is typically provided in two forms: a depth map, which is a greyscale image encoding the distance of each pixel from the camera; or a point cloud, which is a set of 3D coordinates. With accurate calibration parameters, depth maps can be projected into 3D point clouds, and vice versa.
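The conversion between the two forms can be sketched with the pinhole camera model. The intrinsics (`fx`, `fy`, `cx`, `cy`), the millimetre depth unit, and the convention that 0 means "no measurement" are assumptions made for this example; a real camera's SDK defines its own units and invalid-pixel value.

```python
# Illustrative sketch: intrinsics, units, and the invalid-pixel convention are assumptions.
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_map_to_point_cloud(depth_mm: Sequence[Sequence[float]],
                             fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Back-project each valid pixel (u, v, Z) to a camera-frame point (X, Y, Z) in metres."""
    points: List[Point] = []
    for v, row in enumerate(depth_mm):
        for u, z_mm in enumerate(row):
            if z_mm <= 0:  # treated here as "no measurement"
                continue
            z = z_mm / 1000.0
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def project_point(point: Point, fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Project a camera-frame point back to pixel coordinates (u, v)."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


depth = [[1000, 0],
         [0, 2000]]
cloud = depth_map_to_point_cloud(depth, fx=500.0, fy=500.0, cx=0.5, cy=0.5)
print(cloud)
print(project_point(cloud[1], fx=500.0, fy=500.0, cx=0.5, cy=0.5))
```

Projecting a point back recovers its original pixel position, which is the "vice versa" direction mentioned above.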

Common Applications of 3D Vision Technologies

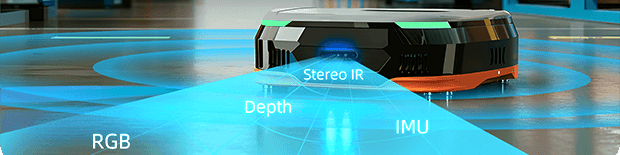

3D vision technologies are adopted across scenarios for their ability to deliver measurable spatial data (e.g., depth maps). They’re commonly used for:

- Defect detection: 3D vision systems provide depth data that enable automated inspection systems to inspect, measure, and find defects in products. They can find sub-visible defects beyond human visual capabilities, controlling product quality and reducing waste.

- Robotics and bin-picking: 3D vision is critical to robots’ perceptions and interactions with their environment. The technology is used in navigation, bin-picking, and collaborative robotics (cobots) that work alongside humans.

- Autonomous navigation: Self-driving vehicles and drones rely on 3D vision to understand their surroundings and navigate safely and efficiently. This includes obstacle detection, lane keeping, and path planning.

- Diagnostics, surgeries, and prosthetics: 3D imaging techniques are used in medical diagnostics, surgical planning, and prosthetic design. For example, 3D technology can be used to reconstruct anatomical structures from CT and MRI scans. Professionals can also create customized prosthetics based on 3D scans of the patient.

- Facial recognition: 3D vision systems significantly improve facial recognition accuracy by capturing depth-based biometrics (e.g., nose bridge profile) that resist spoofing with 2D media such as photos or videos.

Ongoing advancements in 3D sensing, processing, and analysis technologies will likely expand the applications and implementation in different industries. Engineers need to understand the performance metrics of these technologies in order to choose the proper one for their application.

Key Performance Metrics in 3D Vision Technologies

Performance metrics provide a quantifiable understanding of the 3D vision systems’ capabilities and constraints.

Spatial Resolution

Spatial resolution refers to the pixel count of the depth image, expressed as width × height (for example, 640×480 or 1280×720), and determines the granularity of the depth map. A higher spatial resolution produces a more detailed depth map that resolves finer features. However, higher resolution also increases computational demands.

The spatial resolution an application needs depends on the size and complexity of the target objects. High resolution is crucial for applications that require precise measurements.

Field of View (FOV)

The field of view represents the angular extent of the scene that the 3D camera can capture. It’s usually specified by horizontal and vertical angles. A wider FOV helps to view a larger area, which is necessary for applications such as navigation. However, a wider FOV can lead to lower spatial resolution and larger geometric distortion at a fixed distance.
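The trade-off between FOV and spatial resolution can be estimated with basic trigonometry. The sketch below assumes an ideal, distortion-free lens and reports an average footprint per pixel; the angles and pixel count are example values only.

```python
# Illustrative sketch: assumes an ideal lens with no distortion.
import math


def coverage_width_m(fov_deg: float, distance_m: float) -> float:
    """Scene width covered at a given distance: 2 * d * tan(FOV / 2)."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def footprint_per_pixel_mm(fov_deg: float, distance_m: float, pixels: int) -> float:
    """Average scene width covered by one pixel, in millimetres."""
    return coverage_width_m(fov_deg, distance_m) / pixels * 1000.0


for fov in (60.0, 90.0):
    width = coverage_width_m(fov, distance_m=1.0)
    footprint = footprint_per_pixel_mm(fov, distance_m=1.0, pixels=640)
    print(f"{fov:.0f} deg: {width:.2f} m wide, {footprint:.2f} mm per pixel")
```

With the same pixel count, the wider lens spreads each pixel over more of the scene, so small features are sampled more coarsely.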

Depth Range

Depth range defines the operational range within which a 3D camera can accurately measure distances. This distance span is limited by the sensor’s technology and the working environment. Therefore, choosing the right camera involves matching its technical capabilities to the specific needs of the application. For instance, outdoor navigation needs a longer depth range, while a shorter range is sufficient for close-range scanning tasks.

Accuracy and Precision

In the field of 3D sensing, both accuracy and precision are critical metrics for evaluating the performance of a depth camera:

- Accuracy: Refers to how close a measurement is to the true or actual value. It reflects the system’s ability to produce correct results.

- Precision: Refers to the repeatability or consistency of measurements under the same conditions. It includes two important aspects:

  - Spatial precision indicates the consistency of measurements across the entire image plane, meaning the system produces similar depth quality at different locations within the field of view.

  - Temporal precision refers to the consistency of depth readings over time at the same location, across multiple frames.

At Orbbec, our 3D cameras are built to meet some of the highest industry standards for both accuracy and precision. We have strict testing standards, scientific testing procedures, advanced in-line testing equipment, and a rigorous quality control system to ensure high-performance 3D sensing across all units.

High accuracy is ideal for applications that need precise measurements, such as dimensioning and robotic bin-picking.
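One simple way to put numbers on these definitions is to record several depth frames of a flat target at a known distance. The sketch below is a minimal illustration, not a standardized test procedure: it assumes the target is perpendicular to the optical axis, that every pixel in the frames is valid, and that depth is in millimetres.

```python
# Illustrative sketch: assumes a flat target facing the camera and fully valid frames.
from statistics import mean, pstdev


def accuracy_error_mm(frames, true_depth_mm):
    """Mean signed error of all valid readings against the known distance."""
    readings = [z for frame in frames for row in frame for z in row if z > 0]
    return mean(readings) - true_depth_mm


def temporal_precision_mm(frames):
    """Standard deviation of each pixel over time, averaged across pixels."""
    rows, cols = len(frames[0]), len(frames[0][0])
    per_pixel = [pstdev([frame[r][c] for frame in frames])
                 for r in range(rows) for c in range(cols)]
    return mean(per_pixel)


def spatial_precision_mm(frame):
    """Standard deviation across the pixels of a single frame."""
    return pstdev([z for row in frame for z in row if z > 0])


frames = [
    [[1001, 1003], [999, 1001]],
    [[1003, 1003], [1001, 1001]],
]
print(accuracy_error_mm(frames, true_depth_mm=1000))
print(temporal_precision_mm(frames))
print(spatial_precision_mm(frames[0]))
```

A camera can score well on one metric and poorly on another: a constant offset hurts accuracy without affecting either precision figure.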

Frame Rate (FPS)

Frame rate, expressed in frames per second (FPS), reveals the number of depth or RGB frames acquired per second. A higher frame rate is recommended for real-time applications like motion tracking or dynamic scene analysis, helping with smoothness and responsiveness. However, increasing the frame rate will also increase the camera’s processing demands and power consumption.
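To see how quickly processing and transfer demands grow, the uncompressed data rate of a depth stream can be estimated from resolution and frame rate. The 2 bytes per pixel used here is an assumption (16-bit depth values); check the actual pixel format of the camera in question.

```python
# Illustrative sketch: 2 bytes per pixel (16-bit depth) is an assumption.
def raw_stream_mbit_s(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Uncompressed data rate of one stream in megabits per second."""
    return width * height * bytes_per_pixel * fps * 8 / 1e6


for width, height in ((640, 480), (1280, 720)):
    for fps in (30, 60):
        rate = raw_stream_mbit_s(width, height, bytes_per_pixel=2, fps=fps)
        print(f"{width}x{height} @ {fps} FPS: {rate:.1f} Mbit/s")
```

Doubling the frame rate doubles the data rate, and adding an RGB stream on top of depth increases it further.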

Other Important Metrics

Other metrics to be aware of include:

- Latency: Latency measures the end-to-end delay from capturing an image to outputting usable processed data. Low latency is critical for real-time applications.

- Multicamera interference: Refers to the problems that can arise when using multiple 3D cameras in close proximity to each other, which can cause inaccurate depth readings.

- Power consumption: The energy usage of the camera — especially essential for mobile or embedded applications.

- Calibration stability: How well the camera maintains its calibration over time and under varying conditions.

- Environmental sensitivity: Performance changes due to temperature, lighting, and other environmental factors.

How Do Different 3D Cameras Compare?

Each type of 3D camera has strengths and weaknesses in terms of accuracy, range, power consumption, and cost. Here’s a comparison of the three main types.

Stereo Vision Cameras

By capturing images from different viewpoints, stereo vision cameras can calculate depth based on the difference in pixel position, or disparity, of corresponding points in images. Stereo vision systems can be passive or active:

- Passive: Passive stereo vision systems rely on ambient light available in the working environment for disparity calculation, limiting their reliability in low-light conditions.

- Active: Active stereo vision systems use a projector to create a texture pattern on the target surface, which helps with pixel matching on low-texture surfaces or in dimly lit scenes.

Stereo vision camera metrics are heavily influenced by the baseline — the distance between the cameras. A wider baseline offers better depth accuracy. However, it can also increase occlusion — the area visible to one camera but not the other.
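The effect of the baseline on depth accuracy follows from the stereo relation Z = f · B / d: a small disparity error Δd produces a depth error of roughly Z² · Δd / (f · B). The sketch below uses that first-order approximation; the focal length and the 0.1-pixel matching error are hypothetical values.

```python
# Illustrative sketch: focal length and disparity error are assumed values.
def depth_error_m(depth_m: float, focal_length_px: float,
                  baseline_m: float, disparity_error_px: float) -> float:
    """First-order depth error: dZ ~= Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_error_px / (focal_length_px * baseline_m)


for baseline in (0.05, 0.10):
    error = depth_error_m(depth_m=2.0, focal_length_px=600.0,
                          baseline_m=baseline, disparity_error_px=0.1)
    print(f"baseline {baseline * 100:.0f} cm: about {error * 1000:.1f} mm error at 2 m")
```

Doubling the baseline halves the estimated error, and the error grows with the square of the distance, which is why wide-baseline cameras are favored for longer ranges.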

Stereo vision cameras are well suited to autonomous mobile robot (AMR) and robotic arm applications. When combined, active and passive stereo vision techniques can perform well across different lighting conditions. High-quality stereo vision 3D cameras include the Orbbec Gemini 330 Series and the Gemini 2 series: Gemini 2, Gemini 2 L, Gemini 2 XL, and Gemini 215.

ToF Cameras

Time-of-flight cameras measure how long it takes for a light signal to travel from the camera to the object and return. That round-trip time can be used to calculate the distance, capturing depth information efficiently. Orbbec’s ToF cameras include the Femto series — Femto Bolt, Femto Mega, and Femto Mega I.

ToF camera metrics include:

- Modulation frequency, which affects depth resolution.

- Integration time, or exposure time.

- Ambient light rejection, or the ability to filter out ambient light interference.
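For continuous-wave (indirect) ToF, the modulation frequency sets a trade-off: depth is recovered from the phase shift of the returned signal, and the phase wraps around at a maximum unambiguous range of c / (2 · f). The sketch below assumes that measurement principle; it may not apply to every ToF camera, and the frequencies are example values.

```python
# Illustrative sketch: assumes continuous-wave (phase-based) ToF.
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def unambiguous_range_m(modulation_hz: float) -> float:
    """Distance at which the measured phase wraps around: c / (2 * f)."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_hz)


def phase_to_depth_m(phase_rad: float, modulation_hz: float) -> float:
    """Depth from phase shift: d = c * phi / (4 * pi * f)."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * modulation_hz)


for freq in (20e6, 100e6):
    print(f"{freq / 1e6:.0f} MHz: unambiguous range {unambiguous_range_m(freq):.2f} m")
print(phase_to_depth_m(math.pi, 100e6))
```

A higher frequency maps the same phase resolution onto a shorter distance, which gives finer depth resolution at the cost of a shorter unambiguous range.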

ToF cameras are less sensitive to texture and lighting variations than stereo vision, but they usually have lower spatial resolution. Their typical applications include gesture recognition, robotic arms, and AR/VR reconstruction. Other time-of-flight applications include industrial automation, healthcare, and automotive safety.

Structured Light Cameras

Structured light cameras actively project a known pattern, such as a grid or series of stripes, onto the scene and analyze the deformation of the pattern to calculate depth. These cameras offer high accuracy and resolution, making them suitable for detailed 3D measurements. Examples include Orbbec’s Astra Mini Pro and Astra 2.

Structured light camera metrics include:

- Pattern density, which affects depth resolution.

- Pattern uniformity, which affects depth accuracy.

- Decoding accuracy, or the ability to accurately extract depth information from the deformed pattern.

Structured light cameras are typically used in controlled indoor environments, as they can be sensitive to ambient light and occlusions. They’re especially helpful in capturing the fine details of objects and are suitable for scenarios such as dimensioning.

Optimizing 3D Vision for Real-World Performance

It’s crucial to know how to optimize a camera’s 3D vision performance for the application. This means addressing practical challenges such as varying environmental conditions, data processing, data interpretation, and interface selection. Here are some common practices.

Handling Variable Lighting Conditions

Real-world applications can bring challenges due to changing lighting. Variations in lighting can impact the accuracy and reliability of the 3D vision system. The effects can be mitigated in many ways:

- Use active illumination: For example, external light sources can be introduced into the system to provide consistent illumination.

- Employ high dynamic range (HDR) function: HDR imaging technology combines multiple images with different exposures of the same scene to capture both bright and dark areas clearly.

- Apply filtering techniques: Filtering techniques can involve applying optical band-pass filters to suppress ambient light interference.

Different 3D vision technologies come with varying degrees of sensitivity to lighting conditions. For example, structured light systems are generally more sensitive than stereo vision cameras.
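The idea behind combining multiple exposures, as described in the HDR item above, can be sketched as a per-pixel weighted average that favors well-exposed values. This is a deliberately simplified take on exposure fusion, not how any specific camera implements HDR: it uses only a "well-exposedness" weight on intensities normalized to [0, 1], and the `sigma` value is an arbitrary choice.

```python
# Illustrative sketch: simplified exposure fusion on intensities in [0, 1].
import math


def well_exposedness(value: float, sigma: float = 0.2) -> float:
    """Weight that peaks at mid-grey and falls off toward dark (0) and saturated (1)."""
    return math.exp(-((value - 0.5) ** 2) / (2.0 * sigma ** 2))


def fuse_exposures(images):
    """Per-pixel weighted average of several exposures of the same scene."""
    rows, cols = len(images[0]), len(images[0][0])
    fused = []
    for r in range(rows):
        fused_row = []
        for c in range(cols):
            values = [image[r][c] for image in images]
            weights = [well_exposedness(v) for v in values]
            fused_row.append(sum(w * v for w, v in zip(weights, values)) / sum(weights))
        fused.append(fused_row)
    return fused


short_exposure = [[0.05, 0.50]]  # dark region underexposed, bright region fine
long_exposure = [[0.50, 0.98]]   # dark region fine, bright region nearly saturated
print(fuse_exposures([short_exposure, long_exposure]))
```

Each output pixel is dominated by whichever exposure captured it best, so detail survives in both the dark and the bright region.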

Achieving Real-Time Data Processing

Many applications, including automation and robotics, call for real-time performance. This means the 3D vision system must process data fast enough to support timely decision-making and control. Processing speed can be influenced by camera hardware, including the sensor and processor, the complexity of the processing algorithms, and the rate at which data can be transferred.

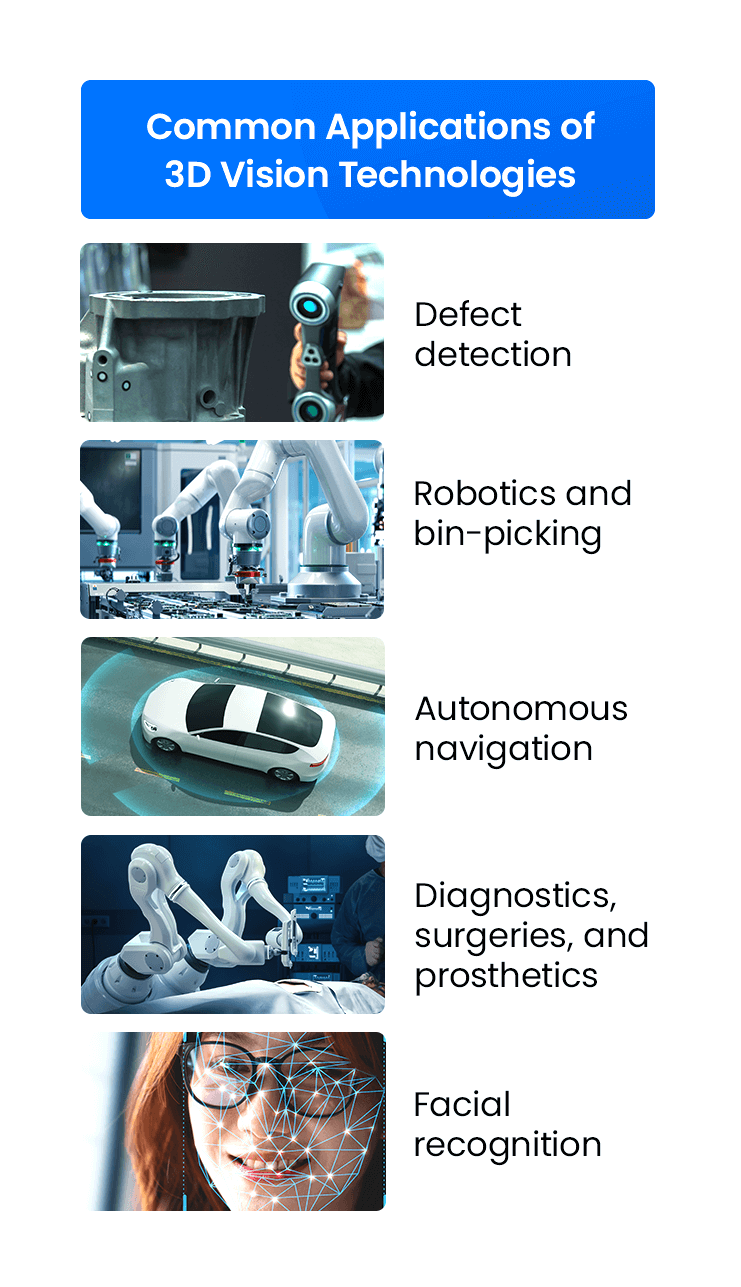

There are several ways to improve processing performance:

- Graphics processing units (GPUs): GPUs are built for highly parallel workloads and handle large volumes of image data efficiently. GPU programming interfaces such as CUDA and OpenGL enable parallel computation by decomposing complex computations into fine-grained tasks that run concurrently. These GPU-based technologies significantly accelerate 3D data processing, making them well suited to applications that require real-time performance and high throughput.

- Efficient algorithms: When processing 3D data, it’s important to use highly efficient algorithms to reduce latency and resource consumption. This can include both custom-developed solutions and third-party libraries such as OpenCV for image processing and PCL for point cloud operations. Choosing the right algorithm stack can significantly improve overall system performance, especially on embedded or other low-spec platforms.

- Streamline data transfer pathways: 3D data is typically large in volume, and real-time applications often demand high frame rates, placing significant pressure on the data transmission pipeline. To handle the data and fulfill the application requirements, it is important to optimize the data transmission path from the camera to the processing unit, which involves both hardware and software strategies. On the hardware side, high-bandwidth interfaces, such as USB 3.2, GMSL, or PCIe, can significantly reduce transfer latency and support higher frame rates. On the software side, using lightweight and well-structured protocols — such as ROS2 with Cyclone DDS, UVC or GMSL frame sync — can minimize overhead and enable reliable data delivery for real-time processing.

- Built-in depth processing ASIC: With a depth engine ASIC inside the camera, depth computation and the corresponding postprocessing, such as noise filtering and depth-to-color (D2C) alignment, can be completed on the device. This reduces latency and dependency on external computing resources. One typical example is the MX6800 ASIC used in the Orbbec Gemini 330 series.

Interpreting 3D Data for Actionable Insights

3D cameras generate various types of data, from depth maps to point clouds and meshes. This data, represented in different data structures, must be processed and analyzed properly to extract meaningful insights. This involves a range of data and image processing techniques depending on the applications, including:

- Filtering to remove noise.

- Segmentation to identify objects of interest.

- Feature extraction to detect key characteristics.
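The three steps above can be sketched on a tiny depth map. The sketch uses a 3×3 median filter, a depth-range threshold, and a centroid as stand-ins for each step; production systems typically rely on libraries such as OpenCV or PCL and more capable algorithms. Depth values are in millimetres, and 0 is assumed to mean "no measurement".

```python
# Illustrative sketch: simple stand-ins for filtering, segmentation, and feature extraction.
from statistics import median


def median_filter_3x3(depth):
    """Replace each pixel with the median of the valid values in its 3x3 neighbourhood."""
    rows, cols = len(depth), len(depth[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [depth[rr][cc]
                      for rr in range(max(0, r - 1), min(rows, r + 2))
                      for cc in range(max(0, c - 1), min(cols, c + 2))
                      if depth[rr][cc] > 0]
            out[r][c] = median(window) if window else 0
    return out


def segment_by_depth(depth, near_mm, far_mm):
    """Boolean mask of pixels whose depth lies inside [near_mm, far_mm]."""
    return [[near_mm <= z <= far_mm for z in row] for row in depth]


def mask_centroid(mask):
    """Centroid (row, col) of the masked pixels, or None if the mask is empty."""
    coords = [(r, c) for r, row in enumerate(mask) for c, inside in enumerate(row) if inside]
    if not coords:
        return None
    return (sum(r for r, _ in coords) / len(coords),
            sum(c for _, c in coords) / len(coords))


noisy = [[2000, 2000, 2000],
         [2000, 500, 2000],   # single-pixel outlier
         [2000, 2000, 2000]]
print(median_filter_3x3(noisy)[1][1])

scene = [[2000, 2000, 2000, 2000],
         [2000, 800, 800, 2000],
         [2000, 800, 800, 2000],
         [2000, 2000, 2000, 2000]]
print(mask_centroid(segment_by_depth(scene, near_mm=500, far_mm=1000)))
```

A median filter this small also erases objects only a few pixels wide, so the filter size has to be matched to the smallest feature the application needs to keep.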

In summary, the goal is to convert the 3D data into actionable insights for tasks like defect detection, object recognition, and autonomous navigation. This goal requires reliable input, which makes evaluating sensor performance a critical step in the process.

Choosing Camera Interfaces for Performance

As noted earlier in the discussion of data transfer, the type of camera interface can greatly impact 3D vision system performance, especially when it comes to bandwidth, latency, distance, and power consumption. Common interfaces include:

- GMSL (Gigabit Multimedia Serial Link): A GMSL interface, like the one used in the Orbbec Gemini 335Lg, offers high bandwidth and low latency, making it well suited to transmitting uncompressed image streams over longer cable runs than USB typically allows. It suits projects that require high-resolution, high-frame-rate data streams. Its robust signal integrity also enables reliable performance in automotive and robotics environments.

- USB 3.0: USB 3.0 is a common interface. It’s used across the Orbbec Gemini 330 series, excluding the Gemini 335Le, and offers a good balance of bandwidth and ease of integration. It works well for general-purpose applications and rapid prototyping. However, in demanding or long-running deployments, such as mobile platforms on uneven terrain, USB connectors may be more prone to disconnection.

- Ethernet/PoE (Power over Ethernet): An Ethernet interface provides long cable lengths, robust data transmission, and the convenience of power over Ethernet, as seen in the Orbbec Gemini 335Le. It’s well suited to distributed multicamera setups and industrial environments. However, compared to GMSL and USB 3.0, Ethernet generally has higher latency and may pose bandwidth limitations for high-resolution, high-frame-rate 3D data.

Key characteristics of each interface include:

| Characteristic | GMSL | USB 3.0 | Ethernet/PoE |
|---|---|---|---|
| Bandwidth | High | Medium | Low |
| Latency | Low | Medium | High |
| Extensibility | High | Medium | Low |
| Cable Length | Medium | Low | High |
| Power Consumption | Low | Medium | High |

Project requirements can guide the interface decision, considering factors like bandwidth, latency, extensibility, cable length, and power consumption.

Choose the Right 3D Vision System

Choosing the right 3D vision camera involves not only evaluating technical specifications but also considering practical integration challenges such as hardware compatibility, data interface, software ecosystem, and support availability. A structured comparison framework can help assess different 3D vision technologies more effectively. Key factors to consider include:

- Working environment, such as lighting, temperature, dust/water, and electromagnetic interference.

- Object characteristics, such as size, shape, material, and surface reflectivity.

- Performance needs, such as speed, accuracy, and precision.

- System resource constraints, such as memory, CPU/GPU usage, and power consumption.

Additionally, some automation integration challenges can be overcome by choosing the right interfaces, whether GMSL, USB, or Ethernet.

Unlock the Power of 3D Vision With Orbbec

Knowing and understanding the performance metrics of 3D vision technology can help individuals choose the best system for their application. Since 2013, Orbbec has democratized 3D vision, offering high-performance products and full-stack solutions for developers in robotics, manufacturing, and more. Our experts have extensive knowledge and experience in many industries. They can help find the best product for the project goals.

Order one of our cameras today to test out the features and see how it can transform your project.