Autonomous mobile robots (AMRs) need robust perception systems to interact with their surroundings. Light detection and ranging (LiDAR) sensors and RGB-Depth (RGB-D) cameras are two types of sensors that help AMRs navigate and localize themselves within a space. The choice between them matters because each sensor modality offers different advantages in depth acquisition, spatial resolution, and environmental adaptability.

Robotics engineers and automation specialists might even combine the sensors for a high-performance solution, allowing robots to navigate more complex spaces and perform tasks reliably. This article will discuss LiDAR and RGB-D cameras in depth, including their differences, similarities, and synergistic potential for use in AMRs.

The Role of Perception in AMRs

Visual sensors and cameras mimic human vision and allow AMRs to perceive their environment and any space they move into. The sensors capture, perceive, and interpret light in the AMR’s surroundings, converting it to a digital signal to be analyzed and processed. This allows AMRs to make navigation decisions based on what they “see” — much like humans do.

In contrast to traditional sensors used in AMRs, visual sensors can create an image of their surroundings and note intricate features within that environment. They capture high-resolution images and video that can be used to detect and recognize objects, such as hazards or items that need to be transported. Some vision systems also use AI-enabled processing, which lets AMRs read signs, interpret visual cues, and perform tasks that require a higher degree of intelligence.

LiDAR Fundamentals and Use in Robotics

LiDAR in robotics is a remote sensing technology that allows robots to perceive their environment, navigate, and interact with objects. LiDAR technology uses laser light to measure distances and create high-resolution 3D maps of a space, including objects in it. It emits laser pulses and measures the time-of-flight (ToF) or phase shift of the reflected signals, using this information to calculate the distance, speed, and other properties of target objects.
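
The ToF principle described above reduces to a single relation: the pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. The following is a minimal sketch of that arithmetic only. The function names are illustrative and do not come from any LiDAR SDK, and a real sensor also has to handle pulse detection, noise, and multiple returns.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact value by SI definition


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target, given the pulse's round-trip time in seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the sensor-to-target distance twice, hence the / 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_from_distance(distance_m: float) -> float:
    """Round-trip time in seconds for a target at the given distance."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    echo_s = round_trip_from_distance(45.0)
    print(f"Echo from a 45 m target arrives after {echo_s * 1e9:.1f} ns")
    print(f"Recovered distance: {distance_from_round_trip(echo_s):.3f} m")
```

The round-trip times involved are a few hundred nanoseconds at most for typical AMR ranges, which is why ToF sensors depend on very precise timing electronics.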

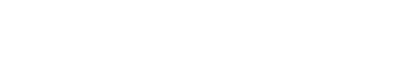

LiDAR is often used in robotics for:

- Simultaneous localization and mapping (SLAM): LiDAR data is essential for SLAM, a technique in which robots map their environment and track their position on the map simultaneously. The robot continuously scans and analyzes its environment, helping it build a more detailed map to avoid obstacles and navigate quickly.

- Real-time obstacle detection: LiDAR excels at real-time obstacle detection, even in low light. It lets robots identify and react to obstacles so they can navigate quickly and safely, even in constantly changing environments.

- Navigating structured and unstructured environments: LiDAR’s 3D sensing capabilities allow robots to navigate both well-defined structured spaces, like warehouses, and complex unstructured environments, like off-road terrain. These LiDAR-equipped robots can adapt quickly to evolving surroundings and plan their paths accordingly.

LiDAR technology is often used in autonomous vehicles, such as self-driving cars, drones, and service robots. While it offers exceptional range and accuracy, the technology can be sensitive to rain, fog, and highly reflective surfaces.

RGB-D Camera Basics and Capabilities

RGB-D cameras combine traditional color imaging with depth perception, giving AMRs the rich data they need to perceive their surroundings and avoid obstacles. The technique works by using structured light or ToF to capture depth information alongside standard RGB images, allowing RGB-D cameras to create dense, real-time 3D scene models.
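
To illustrate how a depth image becomes 3D scene data, each depth pixel can be back-projected into a 3D point in the camera frame. The sketch below assumes an ideal pinhole camera model. The `Intrinsics` type and the numeric values are hypothetical placeholders rather than parameters of any specific camera, and lens distortion and depth-to-color alignment are ignored.

```python
from typing import NamedTuple, Tuple


class Intrinsics(NamedTuple):
    """Pinhole camera parameters in pixels (hypothetical example type)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def deproject_pixel(u: float, v: float, depth_m: float,
                    k: Intrinsics) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with measured depth into camera-frame XYZ."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


if __name__ == "__main__":
    # Assumed intrinsics for a 640x480 depth stream (illustrative values only).
    k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
    print(deproject_pixel(420.0, 240.0, 2.0, k))
```

Applying this to every valid pixel in a depth frame yields a point cloud, and the aligned RGB image supplies a color for each point.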

RGB-D cameras are often used in industrial automation, deployed for tasks like:

- Object recognition: Robotic systems with RGB-D cameras can detect objects more accurately because they pair color images with precise depth data. They can more easily separate objects from their backgrounds and tell apart objects with similar color profiles, even in cluttered spaces.

- Bin picking: RGB-D cameras can analyze the position and orientation of different objects, allowing robots to pick objects from bins, pallets, conveyors, and floors with exceptional accuracy.

- Human-robot collaboration: RGB-D cameras allow robots to recognize gestures and understand the 3D structure of human movements, so they can engage more intuitively with humans. These robots can automate laborious work, reducing workers’ exposure to safety hazards and their risk of injury.

Both color and depth cues are crucial for these tasks, making RGB-D cameras a perfect fit. While these cameras often provide high resolution at close range and richer contextual information, they can be limited by ambient lighting and a shorter effective range.

RGB-D vs. LiDAR: Core Differences and Similarities

While similar in their 3D perception abilities, LiDAR and RGB-D cameras differ in their underlying technology and capabilities. Here are the main similarities and differences between RGB-D cameras and LiDAR sensors.

Similarities

LiDAR and RGB-D cameras share the following capabilities:

- 3D perception: Both sensor types generate spatial data that allows them to reconstruct 3D environments. They can both perform tasks like volumetric mapping, surface modeling, and spatial reasoning.

- Object detection and recognition: Both LiDAR and RGB-D systems can be integrated with machine learning and computer vision algorithms to perform object detection, classification, and pose estimation for a more advanced understanding of the scene.

- Mapping and navigation: Both sensor types are widely used in SLAM frameworks, providing the spatial awareness needed to navigate autonomously, plan paths, and avoid obstacles in real time.

Differences

LiDAR and RGB-D cameras differ in the following ways:

- Depth perception methods: While LiDAR uses ToF laser beams to measure distances and create point clouds, RGB-D cameras usually use optical triangulation or ToF with infrared light. These different depth computation methods lead to different depth resolutions and working distances.

- Depth accuracy: LiDAR tends to offer better depth accuracy than RGB-D cameras and is more resilient to changing lighting conditions. RGB-D cameras are often more affordable, but they are more affected by lighting and tend to have lower depth accuracy.

- Obstacle detection and environmental adaptability: Since LiDAR sensors are less affected by ambient light, they are often ideal for outdoor or variable-lighting conditions and do well in large-scale environments. In contrast, RGB-D cameras usually offer enhanced mapping accuracy in controlled, indoor environments with rich visual features.
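
For RGB-D cameras that rely on optical triangulation (stereo or structured light), the loss of depth accuracy at longer ranges has a simple geometric explanation. Depth is computed as Z = f·B / d, where f is the focal length in pixels, B is the baseline between the two viewpoints, and d is the measured disparity. A fixed disparity error therefore produces a depth error that grows with the square of the distance. The sketch below works through that relation with assumed, illustrative values for f and B. ToF-based depth cameras follow a different error model that is not covered here.

```python
def depth_from_disparity_m(focal_px: float, baseline_m: float,
                           disparity_px: float) -> float:
    """Triangulated depth Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error_m(depth_m: float, focal_px: float, baseline_m: float,
                  disparity_error_px: float) -> float:
    """First-order depth error: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    focal_px, baseline_m = 500.0, 0.05  # assumed values, not a real camera
    for z in (1.0, 2.0, 4.0):
        err = depth_error_m(z, focal_px, baseline_m, disparity_error_px=0.1)
        print(f"Z = {z:.1f} m -> depth error of about {err * 100:.1f} cm")
```

Under this model, doubling the distance quadruples the depth error, which is consistent with RGB-D cameras performing best at close range.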

Key Differences Summarized

The table below summarizes the key differences between the two sensor types:

| Feature | LiDAR | RGB-D Camera |
|---|---|---|
| Technology | Laser-based distance measurement | Color camera + depth sensor, often infrared |
| Accuracy | High | Potentially lower, especially at longer ranges |
| Lighting | Works in most conditions | Affected by strong sunlight or low light |
| Cost | Higher | Lower |
| Data | Dense point clouds | Color images and depth maps |
| Applications | Autonomous driving, robotics, surveying | Robotics, augmented reality, some 3D modeling |

Sensor Fusion: How LiDAR and RGB-D Cameras Work Together in AMRs

Together, LiDAR and RGB-D cameras can give AMRs a more comprehensive view of an environment and help them navigate more efficiently. LiDAR can create 3D maps and measure distances, while RGB-D cameras provide rich color and texture data. Combining these sensor types allows robots to move through spaces with greater ease, recognize objects, and avoid obstacles for more efficient operation.

While sensor fusion can significantly enhance perception, it also introduces challenges such as synchronization, calibration drift, and increased computational load. Robust software frameworks and efficient fusion algorithms are essential to overcome these issues in real-world deployments. Advanced fusion algorithms, like Kalman filtering (provided in OrbbecSDK), can merge the data of both LiDAR and RGB-D camera sensors.

RGB-D and LiDAR Fusion Techniques

Kalman filtering estimates the state of a robot, such as its position, velocity, and orientation. It first predicts the system’s state at the next time step from its past state and control inputs, like acceleration. The filter then takes a new measurement, which is a noisy observation of the actual state, and uses it to correct the prediction, weighting the two by their respective uncertainties to produce a better estimate of the robot’s true state.
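
The predict-and-update cycle can be sketched with a one-dimensional Kalman filter that tracks a robot’s position along a single axis. This is a simplified illustration and not the OrbbecSDK implementation. The class name, the noise variances, and the idea that each sensor delivers a direct position reading are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Scalar Kalman filter: position estimate x with variance p."""
    x: float
    p: float

    def predict(self, velocity_m_s: float, dt_s: float,
                process_var: float) -> None:
        """Propagate the state with a known velocity (the control input)."""
        self.x += velocity_m_s * dt_s
        self.p += process_var  # uncertainty grows while no data arrives

    def update(self, measurement: float, meas_var: float) -> float:
        """Correct the prediction with a noisy measurement; returns the gain."""
        gain = self.p / (self.p + meas_var)
        self.x += gain * (measurement - self.x)
        self.p *= 1.0 - gain
        return gain


if __name__ == "__main__":
    kf = Kalman1D(x=0.0, p=1.0)
    kf.predict(velocity_m_s=1.0, dt_s=0.1, process_var=0.01)
    # Assumed noise levels: LiDAR-derived fix ~2 cm std dev, RGB-D ~5 cm.
    kf.update(measurement=0.12, meas_var=0.02 ** 2)
    kf.update(measurement=0.15, meas_var=0.05 ** 2)
    print(f"fused position: {kf.x:.4f} m, variance: {kf.p:.6f}")
```

Because the gain depends on each measurement’s variance, the lower-noise reading pulls the estimate more strongly, and the fused variance ends up smaller than that of either sensor alone.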

This approach can help calibrate and synchronize LiDAR and RGB-D sensor data to improve SLAM and object detection. It can also enhance semantic mapping, which involves creating maps that combine spatial information with semantic information, like object labels and scene context. This way, robots can better understand what objects are, where they are located, and how they relate to each other.
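
One common building block for combining the two data streams in semantic mapping is projecting LiDAR points into the camera image, so each 3D point can take on the color or object label of the pixel it lands on. The sketch below assumes an ideal pinhole camera and a known extrinsic calibration (rotation and translation) from the LiDAR frame to a camera frame whose z axis points forward. All names and values are hypothetical, and lens distortion is ignored.

```python
from typing import Optional, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]


def lidar_to_pixel(point_lidar: Sequence[float], rotation: Matrix3,
                   translation: Sequence[float], fx: float, fy: float,
                   cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project a LiDAR-frame 3D point to image pixel coordinates (u, v)."""
    # Rigid transform into the camera frame: p_cam = R * p_lidar + t
    x_c, y_c, z_c = (
        sum(rotation[i][j] * point_lidar[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if z_c <= 0:
        return None  # point is behind the camera and cannot be imaged
    # Pinhole projection onto the image plane
    return (fx * x_c / z_c + cx, fy * y_c / z_c + cy)


if __name__ == "__main__":
    identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    # Assumed setup: frames already aligned, illustrative intrinsics.
    print(lidar_to_pixel((0.4, 0.0, 2.0), identity, (0.0, 0.0, 0.0),
                         fx=500.0, fy=500.0, cx=320.0, cy=240.0))
```

The quality of this projection depends directly on the extrinsic calibration, which is one reason calibration drift is a practical concern in fused systems.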

Applications and Performance in Autonomous Mobile Robotics

Integrating LiDAR and RGB-D cameras on AMRs can benefit many industries, from manufacturing to commercial services. Applications and use cases for these combined sensor types include:

- Manufacturing and warehouse automation: In logistics and warehouse automation, LiDAR is often used for global navigation and collision avoidance, while RGB-D cameras enable fine-grained object recognition and manipulation.

- Commercial and service robotics: Service robots benefit from the fusion of LiDAR and RGB-D data for tasks like dynamic obstacle avoidance, semantic scene understanding, and people tracking in complex, crowded environments.

- Healthtech: In hospitals and other health care environments, AMRs with LiDAR and RGB-D can capture patients’ body shape and movement patterns to help assess their health status, personalize treatment plans, and track progress.

- Retail and inventory management: AMRs with LiDAR can be used in retail spaces to navigate and map out stores. RGB-D cameras can help with shelf scanning, identifying products, and monitoring stock levels. Combining the two can provide retail workers with inventory audits to support efficient restocking.

Sensor Selection Criteria for AMR Applications

Choosing the right sensor for an AMR deployment requires careful consideration of the specific application’s requirements and constraints. Engineers need to ensure that the sensor’s characteristics, such as range and resolution, match the operational environment and the complexity of the task.

Specifications that will need to be assessed to match the sensor to the application include:

Camera-Determined Factors

Camera-determined factors to consider include range, resolution, FOV, frame rate and latency, and operating temperature.

- Range: This is the span between the minimum and maximum distances at which a sensor can reliably detect objects. For instance, LiDAR units might offer long detection ranges of around 45 meters, which is necessary for high-speed navigation in larger warehouses or outdoor facilities. RGB-D cameras usually have shorter ranges, such as 0.25 to 5.46 meters, making them effective for close-proximity tasks like bin picking or human-robot interaction.

- Resolution: Resolution is the density of the spatial data points captured by the sensor. A higher resolution allows more precise mapping and object recognition, but it might require greater computational resources if real-time processing is needed.

- Field of view (FOV): FOV is the angular extent of the observable environment. A wide FOV allows the AMR to perceive more of its surroundings at once, reducing blind spots and improving situational awareness.

- Frame rate and latency: The sensor must sustain the required frames per second (fps) at the target resolution and exposure settings with predictable end-to-end latency. Higher fps and lower latency generally suit collision avoidance and high-speed navigation. For fine manipulation, prioritize consistent timing and low jitter over raw fps, and trade frame rate for resolution and stability when needed.

- Operating temperature: The sensor must operate reliably across the specified ambient range and account for thermal drift. Check storage temperature, humidity, and condensation tolerance, and determine whether accuracy degrades near temperature extremes or whether the sensor needs a warm-up period to stabilize.
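
Range, latency, and FOV can be checked against a robot’s motion with some quick arithmetic. The sketch below estimates the minimum detection range needed to stop before an obstacle (distance traveled during the sensing latency plus braking distance) and the width of the area a sensor covers at a given distance. The function names and the example speed, latency, and deceleration figures are assumptions for illustration, and a real safety analysis would follow the applicable standards.

```python
import math


def required_detection_range_m(speed_m_s: float, latency_s: float,
                               decel_m_s2: float,
                               margin_m: float = 0.0) -> float:
    """Reaction distance + braking distance + safety margin."""
    if decel_m_s2 <= 0:
        raise ValueError("deceleration must be positive")
    reaction = speed_m_s * latency_s
    braking = speed_m_s ** 2 / (2.0 * decel_m_s2)
    return reaction + braking + margin_m


def coverage_width_m(distance_m: float, horizontal_fov_deg: float) -> float:
    """Width of the scene covered at a given distance for a horizontal FOV."""
    return 2.0 * distance_m * math.tan(math.radians(horizontal_fov_deg) / 2.0)


if __name__ == "__main__":
    # Assumed robot: 2 m/s top speed, 100 ms sensing latency, 1 m/s^2 braking.
    needed = required_detection_range_m(2.0, 0.1, 1.0, margin_m=0.5)
    print(f"minimum detection range: {needed:.2f} m")
    print(f"coverage at 1 m with a 90 deg FOV: {coverage_width_m(1.0, 90.0):.2f} m")
```

Because braking distance grows with the square of speed, faster robots need disproportionately longer sensing range, which is one reason long-range LiDAR is paired with high-speed navigation.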

Development Factors

Consider the following development factors to choose the right sensor:

- Sensor price: LiDAR sensors, especially those with high resolution and long range, tend to cost more due to their advanced optics and mechanical components. RGB-D cameras, on the other hand, benefit from consumer electronics economies of scale and are generally more affordable.

- Ease of software integration: Consider the availability of drivers and software development kits (SDKs), as well as support for real-time data processing.

- Hardware design space and dimensions: Verify envelope, weight, mounting patterns, and connector orientation so the sensor does not create occlusions or exceed the robot’s height and width constraints. Consider recessed mounts and service access for cleaning lenses or swapping units. It is also important to consider how easy it is to physically mount, electrically connect, and interface with the robot’s onboard computer and software stack.

- Heat dissipation: Confirm typical and peak thermal loads and how heat is removed — conduction to chassis, heatsink, or forced airflow. Derate performance in sealed enclosures. Add thermal pads or heat spreaders as needed and validate that hot surfaces remain within touch-safe limits.

- Power consumption: Capture average, peak, and inrush current. Validate compatibility with rail and power budget under worst-case loads. Model the impact on battery life and ensure an adequate margin for brownout events.

- Data and power interface options: Choose interfaces that match bandwidth and cable-length needs. Check connector type, IP rating, locking mechanisms, sync/trigger pins, and EMI susceptibility.

- Scalability and flexibility: AMR sensor selection should prioritize upgradeability. Sensors should be easy to upgrade or replace as technology advances, so the robot can adapt to evolving perception demands and gain performance improvements throughout its life cycle. The chosen sensor technology should also support cost-effective, scalable deployment across large AMR fleets with minimal integration complexity.
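
Two of the factors above, interface bandwidth and power consumption, lend themselves to quick back-of-the-envelope checks. The sketch below estimates the raw data rate of an uncompressed stream and the effect of a sensor’s power draw on battery runtime. The function names and all numeric inputs are illustrative assumptions rather than specifications of any product, and protocol overhead and compression are ignored.

```python
def stream_bandwidth_mbps(width_px: int, height_px: int,
                          bits_per_pixel: int, fps: float) -> float:
    """Raw (uncompressed) stream data rate in megabits per second."""
    return width_px * height_px * bits_per_pixel * fps / 1e6


def runtime_hours(battery_wh: float, base_load_w: float,
                  sensor_load_w: float) -> float:
    """Estimated runtime from usable battery energy and average loads."""
    total_w = base_load_w + sensor_load_w
    if total_w <= 0:
        raise ValueError("total load must be positive")
    return battery_wh / total_w


if __name__ == "__main__":
    # Assumed stream: 640x480 depth at 16 bits per pixel, 30 fps.
    print(f"depth stream: {stream_bandwidth_mbps(640, 480, 16, 30):.1f} Mbit/s")
    # Assumed robot: 500 Wh battery, 95 W base load, 5 W sensor.
    print(f"runtime: {runtime_hours(500.0, 95.0, 5.0):.1f} h")
```

Comparing the estimated data rate with the usable throughput of the chosen interface, and adding headroom for additional color or infrared streams, helps avoid dropped frames once several sensors share one bus.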

Product-Level Factors

Product-level factors include the operating environment and product maintenance needs.

- Operating environment: Consider the sensor’s durability, looking closely at its IP rating, shock and vibration tolerance, immunity to ambient light and sunlight, and robustness to multi-camera interference. For AMRs operating in industrial or outdoor spaces, sensors must also be rugged enough to handle accidental impacts, constant movement, and exposure to weather, dust, or debris.

- Product maintenance: Field calibration is the process of adjusting sensor parameters like focus and alignment to get the most accurate data output after installing the unit. Sensors that are easy to calibrate can mean less downtime and lower maintenance costs.

Frequently Asked Questions

Here are answers to frequently asked questions about LiDAR and RGB-D cameras.

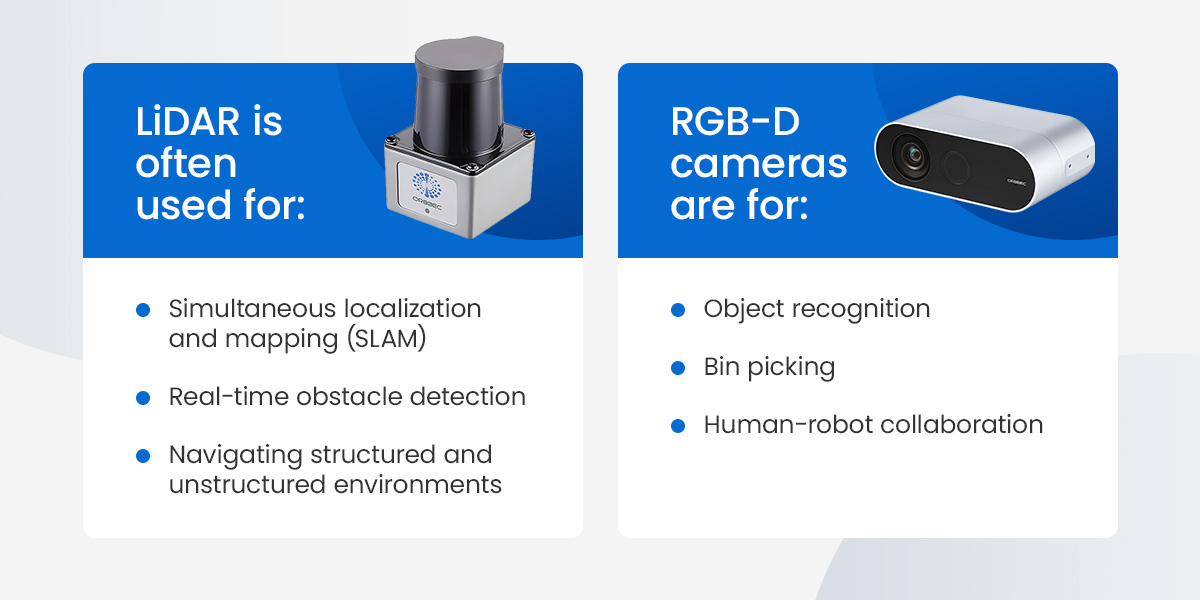

1. How Is LiDAR Used in Robotics?

LiDAR is primarily used for high-precision mapping, localization, and obstacle detection, enabling AMRs to navigate safely and efficiently in dynamic environments.

2. What Is the Difference Between LiDAR and RGB-D Cameras?

LiDAR provides direct depth measurements through the use of laser pulses, while RGB-D cameras combine a standard RGB camera with a depth sensor, such as a ToF or structured light sensor, to generate a depth map alongside a color image.

3. Can RGB-D and LiDAR Be Used Together?

Yes. LiDAR and RGB-D cameras can work together to enhance AMRs’ perception accuracy and overall robustness in demanding operational applications.

Explore Orbbec’s 3D Vision Solutions

Choosing and integrating the right perception sensors can be challenging in AMR development applications. Understanding the differences, synergies, and integration methods for LiDAR and RGB-D cameras can help robotics professionals unlock new levels of performance and reliability.

Orbbec’s advanced RGB-D cameras and LiDAR sensors can help your team achieve the perception, reliability, and efficiency your AMR deployment needs. Orbbec’s 3D vision experts have extensive knowledge of your industry and can help you find the right product for your project goals.

Orbbec also fosters a community for technical support where users can ask questions, find answers, and share knowledge for a smoother experience with their products. Our dedication to the entire product life cycle also includes development kits and innovative solutions to help streamline the development process for AMRs.

Contact Orbbec today to discuss your AMR sensor needs or explore robotics solutions.